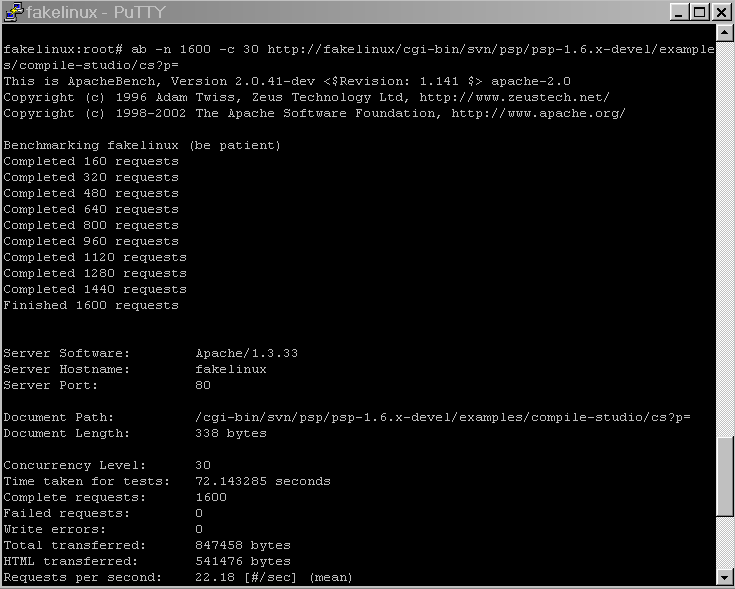

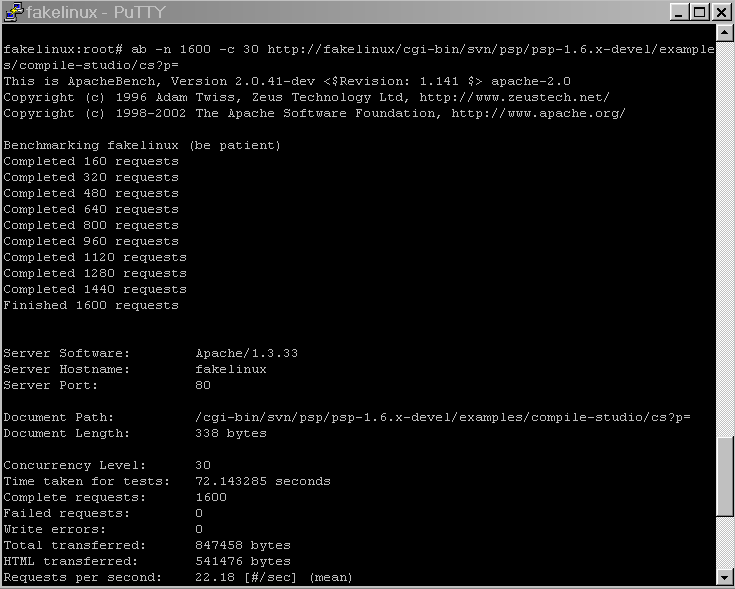

But bear with me and do a bit of math:

22 requests per second would be 79,200 requests per hour, or 1,900,800 per 24-hour day.
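If you'd rather not trust my calculator, here is the same arithmetic as a minimal Python sketch. The function names are made up for illustration; the only number taken from the benchmark is the 22 requests per second.

```python
# Convert a sustained request rate into hourly and daily totals.
SECONDS_PER_HOUR = 60 * 60
HOURS_PER_DAY = 24


def requests_per_hour(rate_per_second):
    """Requests served in one hour at a constant rate."""
    return rate_per_second * SECONDS_PER_HOUR


def requests_per_day(rate_per_second):
    """Requests served in one 24-hour day at a constant rate."""
    return requests_per_hour(rate_per_second) * HOURS_PER_DAY


benchmark_rate = 22  # requests per second, the figure from the benchmark

print(requests_per_hour(benchmark_rate))  # 79200
print(requests_per_day(benchmark_rate))   # 1900800
```

This assumes the server sustains the same rate around the clock, which is the same assumption the figures above make.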

How many businesses get one or two million hits per day? Not many. For those that do - are they using emulators? No. Are they using crippled Samba connections to access the files on some Windows machine through a Unix machine? No. Do their 2 million hit servers usually have about 64 MB or 128 MB of memory? No. Do they usually have a 66 or 100 MHz bus speed and an older, outdated processor? No.

You might be wondering - what is the point of these questions? Pay attention carefully:

How many businesses get one or two million hits per day? Not many.

The benchmark was done under conditions biased against us, and yet most businesses should find the result satisfactory for their website. Most benchmarks are done under conditions biased in the author's favor.

Take into account some more considerations:

Before we consider obsessing over speed - we must do some calculations and ask our customers (or family members, since many speed freaks build home pages for Grandpa which will obviously receive 3 billion hits) how busy their website could realistically and potentially become.

Most startup companies think they'll receive 1 billion website hits within minutes of launching. In fact it's more like 10,000-500,000 hits per 24 hours, on a good day. What's "good" is extremely relative. For a website like eBay, Yahoo, or Google, a good day obviously looks very different than it does for 99% of websites.
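To put that range next to the benchmark, here is a rough sketch that turns hits per day into an average request rate and compares it with the 22 requests per second measured above. The function names are hypothetical, and it averages traffic evenly over the day, which real traffic never does, so treat it as a sizing estimate and not a guarantee.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def average_rate(hits_per_day):
    """Average requests per second if hits were spread evenly over a day."""
    return hits_per_day / SECONDS_PER_DAY


def headroom(hits_per_day, capacity_per_second=22):
    """How many times the measured capacity exceeds the average load."""
    return capacity_per_second / average_rate(hits_per_day)


# The realistic range from the text: 10,000 to 500,000 hits per day.
for hits in (10_000, 500_000):
    print(f"{hits:>9,} hits/day = {average_rate(hits):.2f} req/s average, "
          f"{headroom(hits):.1f}x under the 22 req/s benchmark")
```

Even at the top of the range, the average load comes out to under 6 requests per second. Peaks will be higher than the average, so how much headroom is enough still depends on the customer's traffic pattern.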

There are companies out there who would never see 200,000 hits per day, and yet they build servers and pay programmers by the hour to focus heavily on tuning the website so that it can receive 10 billion hits per day (bugs and memory leaks included, all done in plain C or assembly code). Everyone focuses on the number of hits per second, without first rigorously and critically analyzing realistic customer requirements.

Most benchmarks are designed with a hello world application that runs for a few seconds. How about running the benchmark for a few weeks, to take memory leaks into account? This matters especially in FastCGI, where a program left running for a month gets no garbage collection of each leaky process. Indeed, most people do not even know of these issues, since a benchmark is run for only a few seconds or minutes, not a few weeks or months.
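A back-of-the-envelope sketch shows why a short run hides this. The leak size of 100 bytes per request is purely hypothetical, picked only to illustrate the scale; the model also assumes a single long-lived process serving a constant 22 requests per second and never freeing the leaked memory.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def leaked_bytes(rate_per_second, leak_per_request, duration_seconds):
    """Total bytes leaked by a process that never frees leak_per_request."""
    return rate_per_second * leak_per_request * duration_seconds


RATE = 22               # requests per second, from the benchmark above
LEAK_PER_REQUEST = 100  # bytes; hypothetical, for illustration only

short_run = leaked_bytes(RATE, LEAK_PER_REQUEST, 10)                   # 10-second benchmark
long_run = leaked_bytes(RATE, LEAK_PER_REQUEST, 30 * SECONDS_PER_DAY)  # 30 days of uptime

print(f"10 seconds: {short_run / 1024:.1f} KiB")
print(f"30 days:    {long_run / 1024**3:.1f} GiB")
```

Under these assumptions the ten-second run leaks a few kilobytes that nobody would notice, while the month-long run leaks several gigabytes. A real leak may be far smaller or far larger; the point is only that the duration of the test decides whether you ever see it.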

If a customer tells you that they'd never get over 0.5 million hits per day, would you still build a website capable of handling 3 trillion hits per day? Would this 3 trillion capable website work for a few days, and then crash in a few weeks? We have to take into account realistic business and customer requirements before obsessing over speed.

Benchmarks should never be taken too seriously - saying CGI is slow is a vague statement - almost like saying that "100 miles per hour is slow". Compared to what? A rocket ship going to Mars? It depends on the requirements. 100 miles per hour is slow, and it is fast too. Speed is relative. Stupidity is also relative.

More benchmarks to come in the future. This is just an example of why we need to pull out our calculator from our desk and use it.